A Pain Scale for On-call?

Being on-call is often a necessary part of the job, particularly for engineers in a SaaS business. The burden of operations often negatively impacts morale and productivity. If we were to estimate the impact on a team, we could build a model based on incident frequency, ticket severity, time of alert versus sleeping schedules, and other operational metrics. Alternatively, we can ask the on-call engineers directly, which should be more accurate, and use the metrics to help drive improvements. This article describes an on-call experience program intended to be integrated into an operational review system.

What causes pain

On-call related pain in a software-as-a-service business comes from a variety of sources including:

- Loss of freedom (need to be available to respond to an alert/tethered to a phone)

- Unplanned interruptions from alerts or new tickets

- Loss of sleep due to alerts (or stress-induced insomnia)

- Escalations and stress from needing to respond rapidly

- Discomfort from having to fix problems in unfamiliar or unpleasant systems

The pain is both physiological and psychological, but also very subjective and, crucially, not directly measurable.

Is it all pain?

No! On-call can be enjoyable. First, joining on-call is a sign of trust from the team that the individual can handle problems on their own. It is a graduation event. Second, on-call (ideally) is a time to focus on improvements that help both the clients and the team. Since the scope of improvements is usually small, an engineer can start and finish the effort within a few days. The ability to quickly iterate and make improvements can be very rewarding. On-call can also be a break from other projects, and a “blessed” one, since it still helps the team.

Experience Program Objectives

An experience program is a set of activities designed to help measure and drive toward some ideal experience. An ideal on-call experience should be motivating and show clear improvements in availability, reliability, and operational cost. A new on-call experience program, and its related metrics, should be:

- Actionable — both individuals and teams should be able to leverage the metrics to make on-call more pleasant and effective

- Reliable — repeated measurements under similar situations will yield similar measures; this is necessary if individual scores will be aggregated

- Valid — validity takes many forms:

- face validity — program participants and those examining the results will accept the test as an acceptable and agreeable approach

- content validity — content of the program will fit within the overall operational on-call program

- concurrent validity — results from this program will correlate with future programs

- construct validity — results of this program will correlate with operational signals in an anticipated way; for example, the “alert” positive affect will be negatively correlated with at-night alerts and “nervous” will correlate with the use of new, high-risk maintenance actions

- Ratio-like/Scalar-like — the affect metrics are naturally ordinal (ordered), but may not necessarily be scalar (subject to addition/subtraction/percentage change). If we can demonstrate that we can treat the results numerically, the results can then be treated as trends and can be compared across teams

Details for how we measure and implement these objectives are in the Validation section below.

Design

This design is based on the I-PANAS-SF (International Positive and Negative Affect Schedule Short Form) methodology for measuring emotional affect.

On the day after an individual has been on-call for seven or more hours, they will be prompted to answer this survey:

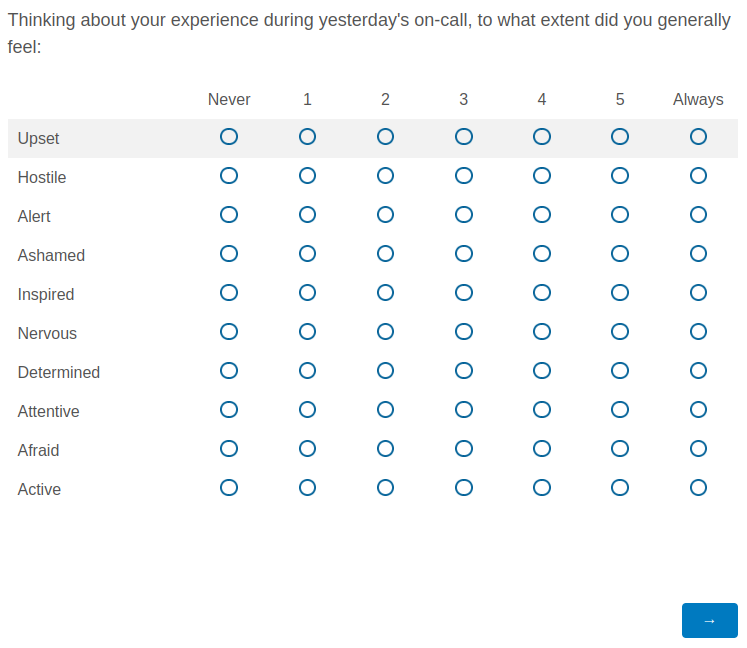

Question: Thinking about your experience during yesterday’s on-call, to what extent did you generally feel:

This will be followed by ten multiple-choice questions. The categories (affect categories) are Upset, Hostile, Alert, Ashamed, Inspired, Nervous, Determined, Attentive, Afraid, and Active. For each category, the respondent may answer never, 1 through 5 inclusive, or always. The ten items may be presented together as a single matrix-type question.

Scoring

A choice of “never” has a value of 0; “always” has a value of 6.

Positive Affect (PA) is the sum of values for Alert, Inspired, Determined, Attentive, and Active.

Negative Affect (NA) is the sum of values for Upset, Hostile, Ashamed, Nervous, and Afraid.

The range of PA and NA is 0 to 30 (inclusive).
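The scoring rules above can be sketched in a few lines of Python. This is a minimal illustration, not part of the program itself: the names `to_value` and `score`, and the choice to represent a response as a dictionary keyed by affect category, are assumptions made for the example.

```python
# Affect categories from the survey, split into the two subscales.
POSITIVE = ("Alert", "Inspired", "Determined", "Attentive", "Active")
NEGATIVE = ("Upset", "Hostile", "Ashamed", "Nervous", "Afraid")

# "never" and "always" anchor the ends of the 0-6 scale.
ANCHORS = {"never": 0, "always": 6}


def to_value(answer):
    """Convert one survey answer ("never", 1-5, or "always") to 0-6."""
    if isinstance(answer, str):
        key = answer.strip().lower()
        if key in ANCHORS:
            return ANCHORS[key]
        answer = int(key)  # accepts "3" as well as 3; raises ValueError otherwise
    if not 1 <= answer <= 5:
        raise ValueError(f"answer out of range: {answer!r}")
    return answer


def score(response):
    """Return (PA, NA) for a dict mapping each affect category to an answer."""
    missing = [c for c in POSITIVE + NEGATIVE if c not in response]
    if missing:
        raise ValueError(f"missing categories: {missing}")
    pa = sum(to_value(response[c]) for c in POSITIVE)
    na = sum(to_value(response[c]) for c in NEGATIVE)
    return pa, na


# Illustrative response (made-up values, not real survey data).
example = {
    "Upset": 2, "Hostile": "never", "Alert": 4, "Ashamed": "never",
    "Inspired": 3, "Nervous": 5, "Determined": "always",
    "Attentive": 4, "Active": 3, "Afraid": 1,
}
print(score(example))  # (20, 8)
```

Rejecting incomplete responses, rather than scoring them with a partial sum, keeps every PA and NA value on the same 0 to 30 range.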

Applying the program as an individual

An individual may find value using this program, even if they do not share data with anyone else. As a form of affect labeling or putting feelings into words, the process has been shown to promote emotional regulation. This can help individuals reduce stress and mentally focus on constructive solutions.

Studies suggest PANAS scores are stable over time and, although they are influenced by the immediate emotional state, they will regress to an individual’s long-term emotional state. By recording their affect while not on-call, an individual can start to quantify their “base” position and detect how much variability they see in their life, which can help in overall emotional tracking and control.

Applying the program within a team

Even if scores have not been shown statistically to be comparable between individuals, a team can examine the scores (both in aggregate and by individual affect) for the previous term as part of its normal operational review cadence. The team should discuss any unusually strong affect, whether positive or negative. For instance, the affects of nervous and afraid may reveal risks that the individual feels are not being mitigated.

The team should focus on learnings and actions before attempting any statistical analysis. The program only does good if the team reflects on the results; the quantitative measures are intended to promote thinking, rather than being an end in themselves.

Applying the program between teams

Although it would be tempting to use the PA and NA scores as a proxy signal for manager effectiveness, the raw scores are subject to both individual and cultural differences. Teams sharing their lessons and actions will be more useful.

Over time, if teams show that their scores are reliable, the data could be transformed into a normalized and standardized form so that it can be compared between teams. I anticipate that not all teams are equal and that teams involved in certain types of operations will cluster around certain values, similar to how mean NPS varies between industries.
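The article does not specify a standardization method. As one possible sketch, the example below uses z-scores against the pooled distribution of all teams' scores; this choice, the function name `standardized_team_means`, and the sample numbers are all assumptions made for illustration.

```python
from statistics import mean, stdev


def standardized_team_means(team_scores):
    """Map each team to the mean z-score of its PA (or NA) scores.

    z-scores are taken against the pooled mean and sample standard deviation
    of all teams' scores, so a value of 0.5 means the team sits half a
    pooled standard deviation above the overall mean.
    """
    pooled = [s for scores in team_scores.values() for s in scores]
    mu, sigma = mean(pooled), stdev(pooled)
    if sigma == 0:
        raise ValueError("all scores are identical; z-scores are undefined")
    return {
        team: mean([(s - mu) / sigma for s in scores])
        for team, scores in team_scores.items()
    }


# Illustrative NA scores for two hypothetical teams (made-up values).
na_scores = {"storage": [6, 8, 7, 9], "frontend": [3, 4, 5, 4]}
print(standardized_team_means(na_scores))
```

Standardizing against the pooled distribution, rather than within each team, keeps differences between teams visible; standardizing within a team would centre every team on zero.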

Rationale and Major Alternatives Considered

I-PANAS-SF and Top-level Design Decisions

The Positive and Negative Affect Schedule (PANAS) is a standardized, well-studied and well-used test for measuring emotional affect. The I-PANAS-SF is a modification of PANAS that reduces the number of questions by eliminating questions that are correlated together as well as changing some of the affect terminology to make scores more consistent across multiple cultures.

I chose a metric based on emotional affect because it can capture both positive and negative feelings and is multi-dimensional, which I anticipate will yield more learnings than a straight intensity score. My initial focus was on measuring pain, but I expect focusing on pain as the measure will bias measures negatively. Furthermore, from my experience, I sometimes looked forward to being on-call, and I think an experience program should try to capture the “full” experience.

Creating new affect categories requires significant data collection, so using existing scales is economically the right choice. Many companies have internationally distributed teams, so using a scale that has been studied cross-culturally and has high-quality translations available should improve the test’s reliability.

Numeric Rating Scale (NRS-11) and other measures of pain intensity

The NRS-11 is a commonly used test for measuring pain intensity. The test uses an eleven-point scale, anchored at zero for “no pain” and ten for “worst pain imaginable”, and can be self-administered.

Administering the NRS-11 would be similar to administering a Net Promoter Score (NPS) or satisfaction score, for which there are ample commercial solutions. However, there are several limitations in this approach:

- NRS-11 has not shown “ratio” properties, so changes in the score over time should not be interpreted as linear changes in pain. (Some measures that are similar to NRS-11 have shown ratio properties, so a correction may be possible.)

- As a measure of pain intensity, this test is unable to measure positive aspects of on-call

- As a single value, this test does not capture any of the flavor of the pain. The test could be expanded with a free-form response (similar to NPS), but free-form data is difficult to standardize across teams.

McGill Pain Questionnaire and other measures of pain affect

The McGill Pain Questionnaire is a common and well-studied test for pain affect. The test lists various adjectives (affects), with terms grouped by intensity, and the respondent notes which adjectives apply.

The test is structured for physical pain, and only about one-third of the affects listed apply to on-call. The test is also purely focused on pain, so it does not track any positive affects or emotions. The pain categories that apply to on-call seem to have analogous affects on the I-PANAS-SF.

Affect Grid

Affect Grid is a single-question test where the respondent marks their current emotional state on a two-axis grid, where the dimensions are pleasant/unpleasant and arousal/sedation. Since it only requires a single mark by the respondent, it is one of the fastest tests to conduct. However, I felt it eliminated too much of the flavor of the response.

Rationale for Secondary Design Elements

Prompting for data the day after

Our memories about feelings tend to dampen over time, so ideally we will collect data soon after the event. Collecting after a day, rather than after the rotation, helps standardize time series analysis and comparison against other teams (which may not have the same rotations) and allows teams to see the variance in scores and the impact of events, like incidents, more directly. Alternatively, we could collect data during the on-call (similar to an Experience Sampling Method), which might be the most accurate way of measurement. However, on-call is usually interrupt-driven, and the on-call engineer may be in the middle of resolving higher-priority matters. We should not add to that burden, so the survey lets the individual provide the data on their own schedule.

Prompting for data only if on-call duration is at least seven hours

It is fairly common for someone to override another person’s schedule. In my experience, if the override is fairly short, then the person taking the override is likely just monitoring for alerts and is thus experiencing a subset of the full on-call experience. Eliminating these periods from data collection should enhance data quality and reduce fatigue from answering the survey.
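A minimal sketch of the prompting rule, following the "seven or more hours" wording in the Design section. The function name `should_prompt`, the representation of on-call periods as `(start, end)` pairs, and the decision to sum several periods from the same day are assumptions; the article does not say how multiple short overrides should be combined.

```python
from datetime import datetime, timedelta

MIN_ON_CALL = timedelta(hours=7)  # threshold from the Design section


def should_prompt(shifts):
    """Return True if the on-call periods total seven or more hours.

    shifts: list of (start, end) datetime pairs for one person on one day.
    Summing split periods is an assumption made for this sketch.
    """
    total = sum((end - start for start, end in shifts), timedelta())
    return total >= MIN_ON_CALL


# A two-hour override does not trigger the survey; a full shift does.
short_override = [(datetime(2024, 1, 1, 12), datetime(2024, 1, 1, 14))]
full_shift = [(datetime(2024, 1, 1, 9), datetime(2024, 1, 1, 17))]
print(should_prompt(short_override), should_prompt(full_shift))  # False True
```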

Participants

The expectation is that participants in this program will be adult professionals, most likely with college education, or at least literate. The individuals will be familiar with using a computer and the question will be provided in one of their “work place” languages. Individuals within a pool may differ by gender and nationality.

Based on these assumptions, we need a test that is robust/valid across different population groups, although each group will be literate adults.

Program Validation

Demonstrating Reliability

There are four primary ways to evaluate reliability: test-retest, parallel forms, split-half, and internal consistency. We posit that the “true on-call experience” is measured on the working days (this would be the weekdays for a weekly on-call cadence) and that, although each day is unique, they can be treated as multiple repeated “samplings” of an experience. Thus, we can use the test-retest methodology for evaluating reliability.

We group consecutive days with recorded values from the same individual into pairs \((x, y)\), pool the pairs from all individuals on the same team, and calculate the Pearson product-moment correlation coefficient via:

$$ r = \frac{\sum_{i=1}^{n} (\frac{x_i - \bar{x}}{s_x})(\frac{y_i - \bar{y}}{s_y})}{n - 1} $$

where \(\bar{x}\) and \(\bar{y}\) are the means and \(s_x\) and \(s_y\) are the sample standard deviations of the x and y values, and \(n\) is the number of pairs. The correlation coefficient \(r\) can be considered the reliability metric.

We aim for reliability of 0.7 or greater, which is the expected reliability of personality tests. Collecting 30 observations per individual on the team (which, given observations are collected daily, implies at least 30 days of on-call) should be sufficient for starting statistical analysis.
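The test-retest calculation can be sketched as follows. The function names, the use of integer day numbers (for example `date.toordinal()`) as keys, and the sample data are assumptions made for illustration; the correlation itself follows the formula above, using sample standard deviations.

```python
from math import sqrt


def consecutive_pairs(days_to_score):
    """Pair each recorded day with the next calendar day, if also recorded.

    days_to_score maps a day number to that day's PA or NA score.
    """
    return [
        (days_to_score[d], days_to_score[d + 1])
        for d in sorted(days_to_score)
        if d + 1 in days_to_score
    ]


def pearson_r(pairs):
    """Pearson r using sample standard deviations (n - 1 in the denominator)."""
    n = len(pairs)
    if n < 2:
        raise ValueError("need at least two pairs")
    xs = [x for x, _ in pairs]
    ys = [y for _, y in pairs]
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    s_x = sqrt(sum((x - mean_x) ** 2 for x in xs) / (n - 1))
    s_y = sqrt(sum((y - mean_y) ** 2 for y in ys) / (n - 1))
    if s_x == 0 or s_y == 0:
        raise ValueError("r is undefined when either series is constant")
    return sum(
        ((x - mean_x) / s_x) * ((y - mean_y) / s_y) for x, y in pairs
    ) / (n - 1)


def team_reliability(team):
    """Pool consecutive-day pairs from every individual, then compute r."""
    pairs = []
    for days_to_score in team.values():
        pairs.extend(consecutive_pairs(days_to_score))
    return pearson_r(pairs)


# Made-up PA scores for two hypothetical engineers, keyed by day number.
team = {
    "engineer_a": {1: 18, 2: 20, 3: 17, 4: 19},
    "engineer_b": {1: 9, 2: 11, 3: 10, 5: 12},
}
print(round(team_reliability(team), 2))
```

A gap in the recorded days (day 4 for `engineer_b` above) simply yields fewer pairs; days are never paired across a gap.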

Demonstrating Validity

Face Validity

Face validity requires participants to take the effort seriously. This program is not designed to handle adversarial participants. This article, by being open and available to participants, should aid in face validity since participants can understand the intent, design decisions, and proposed analysis process. Informed participants can decide if the approach is sound and potentially useful.

Content Validity

Content validity will be shown if teams use and continue to use the program within their operational program. If the program generates actionable insight, it will be shown in their weekly reports.

Concurrent Validity

Since the author is unaware of existing on-call experience metric programs, concurrent validity is an aspirational goal: that future experience programs will produce statistically similar measurements. If there were existing programs, part of the validation effort would be to compare results against those programs.

Construct Validity

I hypothesize that:

- PA will correlate with the closing of operational improvement tickets (tickets created by the team to improve operations)

- NA will correlate with incidents

- The affects of Nervous and Afraid will increase ahead of major operational items (e.g. non-standard database migrations, data center moves)

- The Alert affect will negatively correlate with off-hours alerts (although some bucketing/combining of alerts by time may be required)
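One way to check hypotheses like these is a rank correlation between daily affect scores and an operational signal. The sketch below uses Spearman's rank correlation, which is a substitution on my part (the article only names Pearson, in the reliability section); it is chosen here because the affect scores are ordinal. The function names and sample data are hypothetical.

```python
def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # sorted positions i..j share this rank
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def spearman_rho(xs, ys):
    """Spearman rank correlation: Pearson correlation of the ranks."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length series with at least two points")
    rx, ry = average_ranks(xs), average_ranks(ys)
    n = len(rx)
    mean_x, mean_y = sum(rx) / n, sum(ry) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(rx, ry))
    var_x = sum((a - mean_x) ** 2 for a in rx)
    var_y = sum((b - mean_y) ** 2 for b in ry)
    if var_x == 0 or var_y == 0:
        raise ValueError("rho is undefined when either series is constant")
    return cov / (var_x * var_y) ** 0.5


# Made-up daily NA scores and incident counts for the same days.
daily_na = [4, 9, 6, 15, 5, 12]
daily_incidents = [0, 1, 0, 3, 0, 2]
print(round(spearman_rho(daily_na, daily_incidents), 2))
```

A positive value would be consistent with the hypothesis that NA rises with incidents; with only a handful of days, the estimate is too noisy to support any conclusion.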

Further Reading

Dennis C. Turk (Ed.) and Ronald Melzack (Ed.). 2001. Handbook of Pain Assessment (2nd. ed.). The Guilford Press, New York, NY.

John Rust and Susan Golombok. 1999. Modern Psychometrics: The Science of Psychological Assessment (2nd. ed.). Routledge, New York, NY.