How User Groups Made Software Reuse a Reality

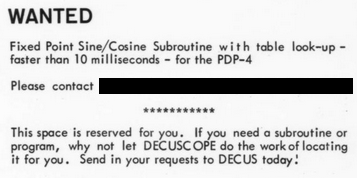

Before the widespread existence of software repositories like CPAN, NPM, and PyPI, developers seeking to reuse an existing algorithm or library of routines would either check books or journals for code, or, they just might post a classified ad:

User groups provided catalogues of software, from mathematical algorithms to system utilities to games and demos. Leveraging the user group’s periodicals, developers could post requests for specific examples of code. Or, more frequently, developers would review catalogs for existing solutions. They would contribute by sending their own creations to the group for others to use.

In this article, we will examine how these user groups coordinated development and shared code, how they promoted discoverability of software, and how they attempted to maintain a high bar of quality.

Origins

While the importance of a set of reusable subroutines to reduce the cost of development was noted in (Goldstine 1947) and the first set of published subroutines came out in (Wheeler 1951), the lack of standardization between computers and sites meant that it was concepts that were being shared, not code. Reuse implied porting code between architectures and languages.

By the mid-1950s, computers had shifted from a research and bespoke creation, where an organization might build their own computer, to one where manufacturers sold multiple copies of the same model of computer. IBM announced the 701 or “Defense Calculator” in 1952 and installed it in nineteen sites. For business computing, the IBM 650 first shipped in December 1954 and eventually saw over 2,000 installations.

With multiple instances of the same machine model, programs and practices could be replicated directly between sites.

Faced with a programmer shortage, productive utilization of their 701s as low as 60%, and the coming obsolecense of the 701s by the 704s, a few West Coast aerospace companies decided to cooperate on a Project for the Advancement of Coding Techniques (PACT) (Malahn 1956). Formed in November 1954, they designed and developed a coding preprocessor to automate repetitious coding efforts. By June 1955 an implementation was available. Members of the project reported initial success and started evangelizing both the techniques used in the project and the cooperative nature by September 1955 (Greenwald 1956).

In August 1955, the first cooperative user group, SHARE, was formed. The formative group of seventeen installations were all users of the IBM 701 and were anticipating the transition to the 704. The listed advantages for joining included “[…] do considerably less programming and checkout of utility routines, mathematical routines, and complete systems”. In addition, the group represented “authoritative customer opinion” and thus promised greater influence on IBM’s product plans (SHARE 1956).

Obligation of SHARE membership included “have a cooperative spirit” and “respect for the competence of other members”. Furthermore, each member site was expected to attend meetings with at least two members, one with a technical understanding of the system and another with the ability to commit resources. They also were expected to promptly answer the mail and keep the Secretary informed of any delays in their programming assignments. Programs were distributed as type-written copies of the documentation and code.

The Univac Scientific Exchange, or USE, was founded in January 1956. This group was initially focused on the 1103A, Univac’s competitor to the 704. Similar to PACT and SHARE, they proposed using a similar “minimum standard assembly” language and cooperatively developing and sharing programs. The list of proposed programs included function subroutines, matrix, linear programming, tape handlers, and data output. The committee deferred developing of data input and post mortems utilities until a common language had been adopted (USE 1956).

Of the proposed programs, USE volunteers submitted and accepted seven work assignments. USE members checked the programs on a 1103. The subroutines were for exponential calculations, logarithms, square root, sine/cosine, arc sine, and arc tangent (USE-c 1956).

DECUS or the Digital Equipment Computer Users Society, which eventually became the largest user group, was formed in 1961. As DEC’s first computer was only delivered in November 1960, this demonstrates that customers saw immediate value in joining these groups. By 1971, there were sixteen operational user groups.

Controlling Quality

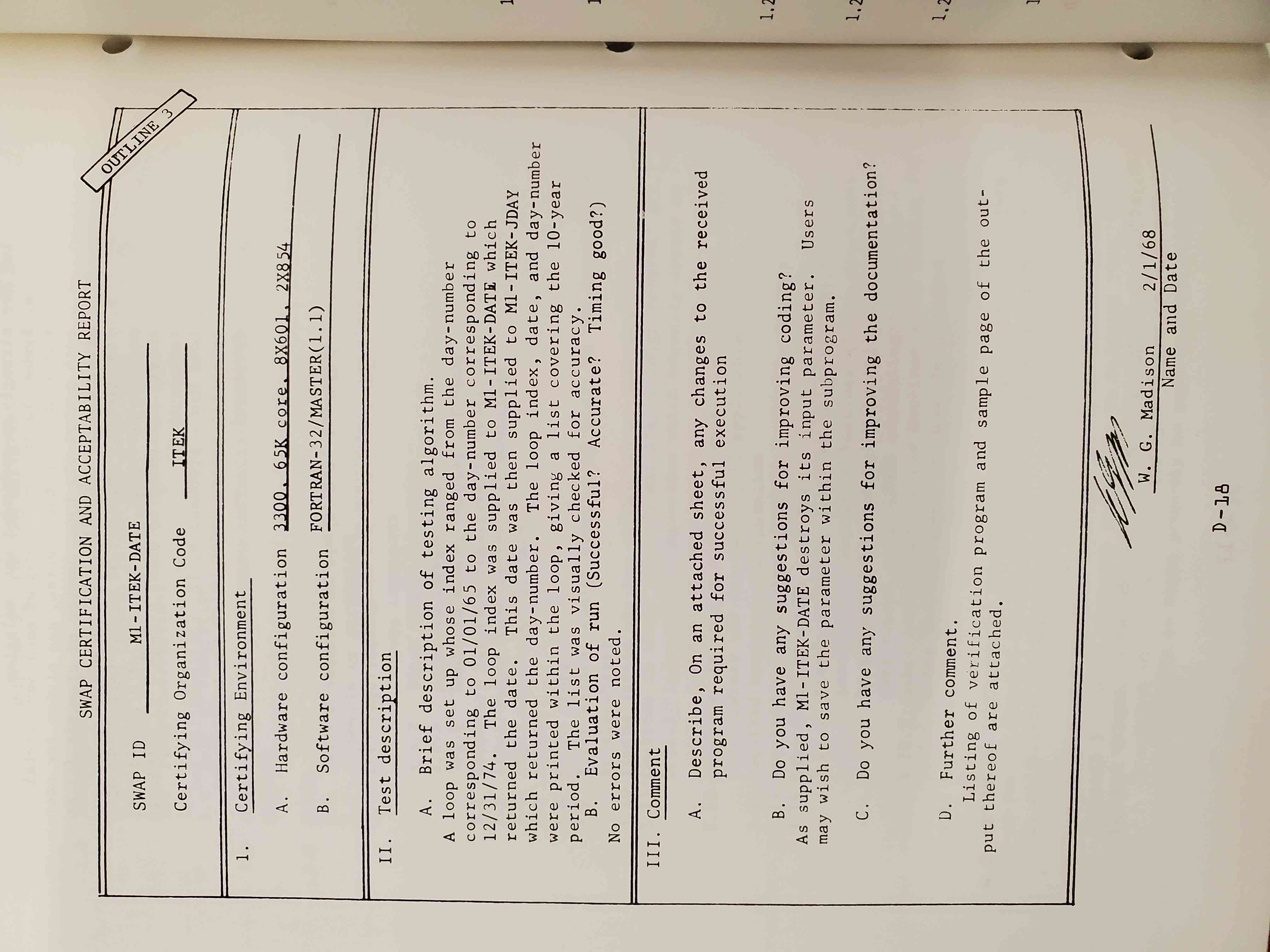

As user groups assembled their initial catalogs of algorithms, they were concerned about the accuracy, efficiency, and documentation of the submissions. Since authors and their employers were recorded in the catalog, authors were expected to be highly conscientious about their submissions. After code was submitted, it was then normally sent to a second person who would verify the code worked as expected on a different set of hardware. A third person would then verify that both the submitter and checker had performed their duty and signed off on the result.

A typical form for this process is from the SWAP user group (SWAP 1968). SWAP was the user group for Control Data Computer systems.

The expectations for documentation quality at SHARE and USE were robust. The proposed documentation sections (USE-b 1956) were:

- Identification

- Title

- Author - Date

- Installation

- Purpose

- Method

- Accuracy

- Range

- Derivation or Reference

- Usage

- Calling Sequence

- Control Data

- Operational Procedure

- Space Required

- Error Codes

- Format Received or Generated if an Input-Output Routine

- Restrictions

- Components required other than minimum 1103A

- Other Programs Required

- Data

- Card Form, Tape Format

- Wiring Diagram or Board Used, if a Print Routine

- Coding Information

- Constraints

- Erasable Input-Output Locations

- Time

SHARE required the same list of top-level sections (although in slightly different order), plus a “Checkout” section to describe tests performed by the author that could be replicated to verify correct duplication or porting of the code.

As an example of timing information, MTI-0, a linear matrix equation solver, provided the machine time in milliseconds as (USE-b 1956):

.3n^3 + .9n^2 * m + 1.7n^2 + .3m^2 + 2.5nm + 1.8n + 1.6m + 2.7

The write-up for MTI-0 required eight typewritten pages. The author took five pages for documentation and three for the source code. Perhaps unusually, the code was both written and checked by the same person, while in the other cases the author observed distinct individuals.

While user groups attempted to hold a high bar for quality, none went so far as to guarantee the fitness of software. In a July 1956 memo, SHARE adopted the following disclaimer on all programs:

Although this program has been carefully tested by its contributor, no guarantee is made of its correct functioning under all conditions, and no responsibility is taken by him in case of possible failure.

Although the review system seems to have been robust for SHARE and USE, it seems not all user groups adopted it. DECUS had formed a catalog of about twenty programs early in 1962. However, as reported in the Nov 1962 (Vol I, No 8) issue of Decuscope, the organization had suspended distribution of the tapes due to reported corruption issues. As documented in January 1963, their method of duplicating paper tapes was unreliable, requiring them to reassemble programs. DECUS continued to struggle with paper tape corruption throughout the year as a letter in the Nov 1963 newsletter notes that the tape has garbage at the end, visible labels causing checksum errors, and out of spec spacing of holes.

For DECUS, January 1963 also saw the beginning of their system of certification which required two checkers. A letter to the editor asked “how to induce users to report program failures and how best to utilize such reports.” The author was also concerned about free-loaders and advocated to “establish punitive measures for failure to contribute to the ‘certification’ process.”

By 1966, though, DECUS saw a need to distribute software with less overhead. In Vol 5, Issue 5, the editors launched a new section “Available from Authors” to allow advertisements of software that lacked certification. Authors could advertise software while it was still being debugged or lacked full documentation, but was sufficiently complete to be useful.

A year later, DECUS expanded its advertising policy yet again by allowing commercial software to be listed. Only corporations were allowed to submit programs, not individuals. (Individuals could only post no-cost programs.) As a quality measure, DECUS stated it would act as a “repository for complaints” but stated it “will not ordinarily investigate complaints”.

The first commercial advertisement might have been in Volume 8, Issue 2 (1969) with a routine for controlling a Calcomp plotter.

In 1979 (Issue 6), the DECUS certification process seems to have gone away, as instructions on submitting programs no longer mention it. Submitting a program required only a small amount of documentation (enough to populate a catalog entry) and specified how to provide the code for reproducibility.

There was a resurgence of quality concerns in 1983 (Issue 4) with the launch of a new “DECUS Library Program Quality Assurance”. This program’s objective was to verify programs worked as advertised, focusing on the PDP-11. Volunteers were again asked to perform the evaluation. A pilot program evaluated 25 programs, finding seventeen still useful and marked for retention while recommending eight for archival. Rather than being a quality assurance program for individual programs, this was a process for cleaning up the catalog. Demonstrating the human discretion required, an archival decision required more context than just the age and lack of updates to a program.

The multipage form for submitting programs in the 1990 DECUS catalog did not include any questions on testing performed by the author or require any checks by third-parties. Further, the role of DECUS was defined as a “clearinghouse” and they took no position on the quality of software.

This trajectory was influenced by two trends. One, manufacturers were delivering much more software with the computer and thus were responsible for the core functionality. For instance, manufactures supplied operating sytems, compilers, and standard libraries. Customers no longer expected to write their own math routines. This also meant customers relied more on the manufacturer and less on each other. Two, the volume of software being developed and shared exceeded the user group’s ability to review and control it. Economically, most of the collected fees went for basic distribution and administration of conferences. There were few volunteers to review code and little money to incentivize people.

Promoting Discoverability

The first computer science textbook, The Preparation of Programs for an Electronic Digital Computer included an appendix of programs developed for the EDSAC (Wheeler, 1951). Wheeler et al. organized the programs into a hierarchy based on their application area, each area denoted by a single letter. USE and SHARE adopted very similar schemes (Table 1 and 2, (USTB 1958) (Balstad 1975), respectively).

Table 1: Top-level Revised Univac Scientific Routine Index, 1958

| Section | Category |

|---|---|

| 1 | Programmed Arithmetic |

| 2 | Elementary Functions |

| 3 | Polynomials and Special Functions |

| 4 | Differential Equations |

| 5 | Interpolations and Approximations |

| 6 | Matrices, Vectors, Simultaneous Linear Equations |

| 7 | Statistical Analysis and Probability |

| 8 | Operations Research and Linear Programming |

| 9 | Input Routines |

| 10 | Output Routines |

| 11 | Executive Routines |

| 12 | Information Processing |

| 13 | Debugging Routines |

| 14 | Simulation Programs |

| 15 | Diagnostic Routines |

| 16 | Service Programs |

| 17 | All Others |

Table 2: Top-level SHARE Classification Scheme, 1965

| Code | Category |

|---|---|

| A | Arithmetic Routines |

| B | Elementary Functions |

| C | Polynomials and Special Functions |

| D | Operations on Functions and Solutions of Differential Equations |

| E | Interpolation and Approximations |

| F | Operations on Matrices, Vectors, and Simultaneous Linear Equations |

| G | Statistical Analysis and Probability |

| H | Operations Research Techniques, Simulation and Management Science |

| I | Input |

| J | Output |

| K | Internal Information Transfer |

| L | Executive Routines |

| M | Data Handling |

| N | Debugging |

| O | Simulation of Computers and Data Processors; Interpreters |

| P | Diagnostics |

| Q | Service or Housekeeping; Programming Aids |

| R | Logical and Symbolic |

| S | Information Retrieval |

| T | Applications and Application-oriented Programs |

| U | Linguistics and Languages |

| V | General Purpose Utility Subroutines |

| Z | All Others |

Within the 1950s and to the mid-1960s, each user group’s focus was on code that a developer could copy into their own programs, rather than free-standing programs. There were full-fledged programs and utilities available, such as assemblers, editors, and maintenance tools, but they were in the minority.

In 1961, the Joint Users Group (JUG) organized itself within the the Association for Computing Machinery. JUG sought to close the “software gap”, the time and effort required between specification and implementation. By 1971, JUG worked with 16 different user groups to assemble a directory, with well-defined metadata, of software available on the various systems. Computer Programs Directory was published first in 1971 with a second volume following in 1974. These directories represent a snapshot in time of software being exchanged within user groups as well as what developers thought others might find useful.

The 1974 directory included a classification system (adopted in 1972) that expanded the list with many application areas, but maintained categories from the earlier SHARE and WWG list. There was a need for application areas as user members were increasingly sharing full programs rather than individual routines. In the 61 Education category, the directory lists twenty programs under Demonstrations (61.1), thirty-five programs under Problem Solving (61.2), and twenty-one programs under Record Keeping (61.3). Most of these programs were written in BASIC.

Table 3: Top-level Program Library Classification Code, 1974

| Code | Category |

|---|---|

| 00 | Utility (External) Programs |

| 01 | Utility (Internal) Programs |

| 02 | Diagnostics |

| 03 | Programming Systems |

| 04 | Testing and Debugging |

| 05 | Executive Routines |

| 06 | Data Handling |

| 07 | Input/Output |

| 10 | Systems Analysis |

| 11 | Simulation of Computer and Components |

| 12 | Conversion of Programs and Data |

| 13 | Statistical |

| 15 | Management Science/Operations Research |

| 16 | Engineering |

| 17 | Sciences and Mathematics |

| 18 | Nuclear Codes |

| 19 | Financial |

| 20 | Cost Accounting |

| 21 | Payroll and Benefits |

| 22 | Personnel |

| 23 | Manufacturing |

| 24 | Quality Assurance/Reliability |

| 25 | Inventory |

| 26 | Purchasing |

| 27 | Marketing |

| 28 | Sales Entered and Billed |

| 29 | General Business Services |

| 30 | Demonstration and Games |

| 40 | Arithmetic Routines |

| 41 | Elementary Functions |

| 42 | Polynomials and Special Functions |

| 43 | Operations on Functions and Solutions of Differential Equations |

| 44 | Interpolation and Approximations |

| 45 | Operations on Matrices, Vectors, and Simultaneous Linear Equations |

| 50 | Insurance |

| 61 | Education |

| 62 | Literary Data Processing |

| 63 | Humanities |

| 71 | Hybrid Computing |

| 72 | Time Sharing |

| 99 | Miscellaneous |

What languages did contributors use? Drawing from JUG 1971 and 1974 using a random sampling approach, the author sampled 193 programs and counted by the primary computer language (Table 3). In cases where a language had multiple versions (e.g. FORTRAN II is distinct from Fortran 66), the author simplified to the “parent” language name. The author also grouped together all assembly languages.

Table 4: Programming Language Count and Percentage from the JUG listings

| Language | Count | Percentage |

|---|---|---|

| FORTRAN | 73 | 38% |

| Assembly (incl. MACRO & COMPASS) | 49 | 25% |

| PAL (Pedagogic Algorithmic Language) | 20 | 10% |

| Unspecified | 11 | 6% |

| BASIC | 10 | 5% |

| FOCAL | 10 | 5% |

| LAP6 | 5 | 3% |

| ALGOL | 4 | 2% |

| Wang | 4 | 2% |

| COBOL | 3 | 2% |

| ASPER, CODAP-1, CP, DIAL | All n=1 | 2% |

As the majority of computer sites were performing technical work, it is unsurprising that FORTRAN, as the first high-level language targeting engineering and scientific use cases, counts for nearly 40% of the programs. FORTRAN also had the advantage of being supported on multiple platforms, rather than being a vendor-specific language. Assembly counted for approximately one quarter of the programs, but by this point, programs shipped in assembly focused on hardware drivers or low-level equipment tests and tools. The large amount of programs written in PAL can be contributed to their educational lesson focus and that large numbers of lessons could be cranked out with little effort. FOCAL and BASIC competed in the same niche, and were similar syntactically and functionally, but FOCAL was DEC-specific while BASIC was ported between many architectures. LAP6 was the language for the LINC personal workstation. Although there were only 50 workstations built, the LINC community were especially enthusiastic about sharing what software they wrote.

Perhaps to the chagrin of the ACM, ALGOL barely registered even though it was designed for cross-platform use and there were compilers available for most architectures. Similarly, COBOL barely registers on the list. ACM anticipated greater sharing of business related software as shown by their adding categories like “27 Marketing”, but the actual directory showed only minimal activity in such categories. The activity that existed were more likely to be BASIC programs, such as one for handling personnel recruiting workflows.

Wang and the other items in the table were vendor-specific languages.

Since DECUS was the largest user group and contributed the largest number of items to the JUG directory, we would expect the table to be biased towards languages available on the PDP minicomputers.

Protecting Software Integrity

The initial threats to software integrity were fairly prosaic: errors introduced by manual copying of code from one medium to another and the degradation of storage over time. To combat this, documentation included steps for verifying and checkout out code to ensure it was copied correctly.

As user groups acquired duplicating machinery, they started distributed software via paper tape. However, paper tape was error prone, as were the technologies used to process it. In the 1966 Volume 5, Issue 2 of DECUScope, a review of the ASR-33 stated it was a “weak link in the system in both speed and reliability” and “is quite capable of preparing a tape with a checksum error”. (The Teletype Corporation would improve the reliability of the ASR-33 over the next nearly two decades life of the product.)

Magnetic tape was more reliable, but also more expensive. Floppy diskettes were first commercially available in 1973 and by the late 1970s were supported extensively in minicomputer and microcomputer models. In 1978, 5 1/4 diskettes had fallen to $1.50 in price in bulk or $7 in 2022 dollars. Reliability improved, but replication costs still made mass distribution expensive.

A typical practice, recommended as early as (Wheeler 1951), was to maintain master copies of software which were not to be used directly, but rather copied from time to time to tapes in use.

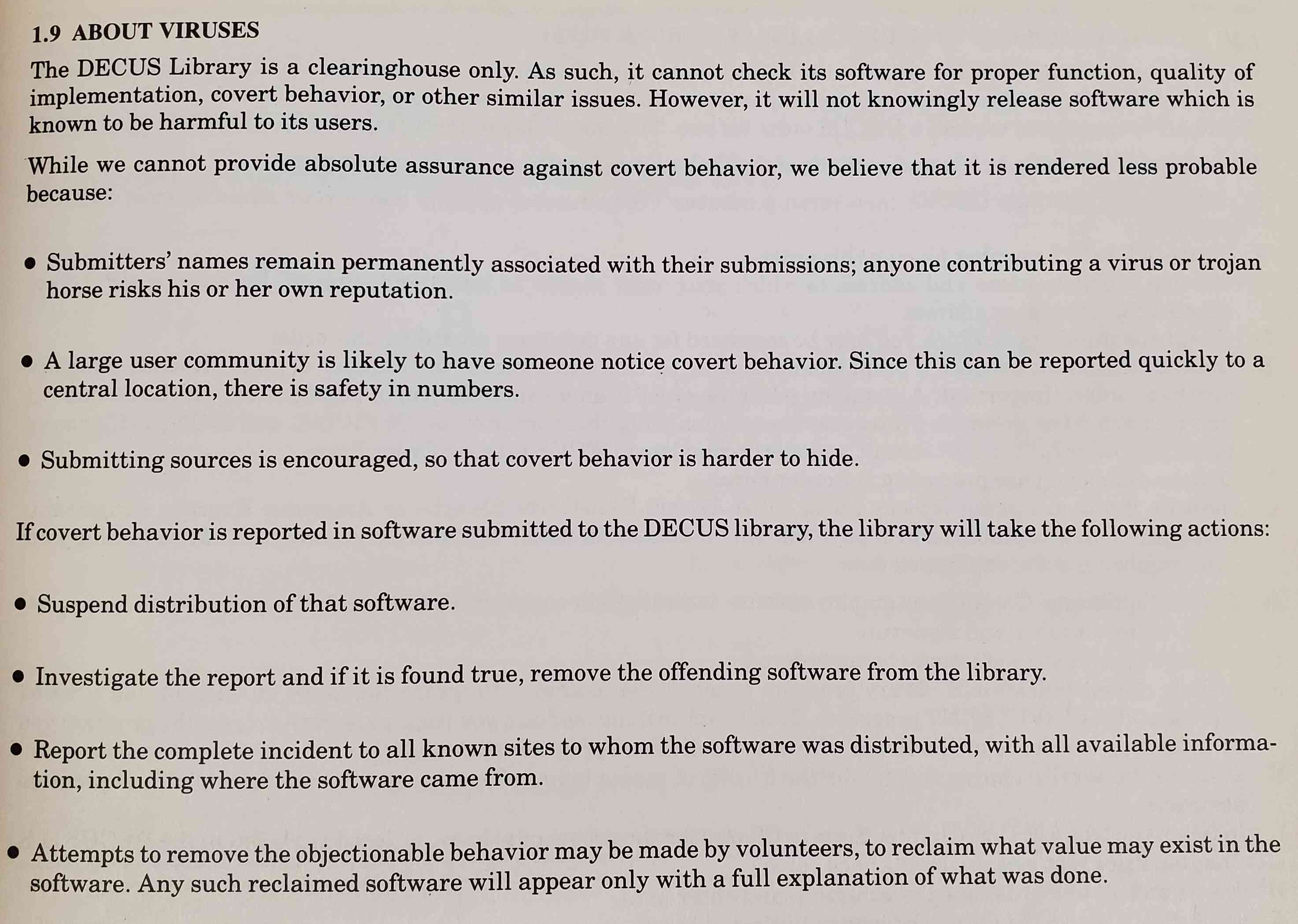

Although improved media reliability and checksums improved the integrity of software distribution, these measures did not ward against adversarial attacks. In the Winter/Spring 1990 DECUS program library, the editors included this note about viruses:

Within this policy we see the continuation of norms from SHARE, that of quality and good behavior coming from the developer’s desire to maintain their reputation. This norm was likely reinforced because user groups did meet face-to-face at conferences. However, based on the submission forms, there were few controls against impersonating another person.

Based on documents the author has reviewed, supply chain adversarial attacks were not a concern until the late 80s. Instead, the user groups expected members to follow norms and avoid inadvertent or intentional harm to other members.

Comparison to Modern Repositories

In “Software Parts Nostalgia”, Robert C. Glass (Glass 1981) argues that SHARE worked because:

- Available to everyone (within a site)

- “Effective parts taxonomy” and “effective delivery document”

- Pride in software authorship

- Not available elsewhere

Do these factor apply to a online software repository?

A modern software respository is more available than software through a user group as they simply require an internet connection — no need to prove you own a specific machine or pay any dues. Further, new software and updates can be made accessed within seconds. This level of availability may be a handicap as the bandwidth costs can be expensive and the sites operate in a hostile environment with constant denial of service attacks. However, compared to sending physical media, websites are multiple orders less expensive.

Modern tooling greatly reduces the friction of adding a dependency to a project and tracking updates, but finding and selecting a library is largely an adhoc process by the developer leveraging search engines and popularity metrics. There are still attempts at a taxonomy, for example crate’s list for Rust, but these are not fine-grained. Modern repositories place greater weight on free-form search than taxonomies. When user groups were sharing paper catalogs, reverse indexed search was not an option.

The MTI-0 write-up and similar documents in the SHARE and USE binders are models of documentation and are analagous to a Unix man page. However, as these programs are really just functions and typically written in assembly, their scope is limited and much of the documentation would be unnecessary if the code was written in a higher-level language. Over time, user groups reduced their expectations on documentation, but user groups also were sharing fewer routines and more stand-alone programs.

Pride is a difficult subject to measure as are the feelings of responsibility, reputation, and ownership. Within the early days of SHARE and USE, a developer would be releasing code to colleagues that met face-to-face at least monthly. Not only could poor quality code lead to ostracization or reduced influence within the group, but high quality code could lead to better jobs and enhanced status. By 1981, when Glass was writing, “software parts” were more likely released commercially by a company. Individual contributions were anonymized, although quality might still be recognized and rewarded within a company.

Alternatives to anonymity, however, were quickly changing with the beginnings of the Free and Open Source movements (GNU was first announced in 1983; OSI formed in 1998). Contributions from individual authors were public and trackable, particularly with software project management sites such as SourceForge, launched in 1999. While public recognition for contributions can be socially rewarding, simply releasing code or a patch is insufficient. Software repositories have ample packages that are buggy, abandoned, and unfit for consumption. Modeled as a clearinghouse, similar to DECUS finding a need to remove obsolete software from their catalog, clearinghouses will fill with junk over time.

Due to network effects and economic efficiency, there tends to be a single repository per programming language – e.g. PyPI for Python, Central Repository for Java, Rubygems for Ruby, NPM for JavaScript. (An organization will often run a private repository for their internal development, but they will share software via an external repository.) The switch from repository per manufacturer to repository per language started with CTAN, the Comprehensive TeX Archive Network, which inspired the very popular CPAN or Comprehensive Perl Archive Network. Officially announced in 1993, CTAN consolidated via mirrors and tooling TeX macros previously distributed across many sites. The balkanization of software sources, which had greatly increased once software started to be unbundled from hardware in 1969, began to reduce with centralized systems (even if they used distributed mirrors).

Lessons for Modern Development

- Communities can rapidly fill missing documentation and features

If a community can come together and organize, they can effectively defend their interests and create solutions. The computer manufacturers of the time helped the user groups grow by including notices for them when they sold a machine, whether the user group was directly managed by the manufacturer or not.

- Mirrors are great for availability

The answer to code availability and corruption issues has always been local copies. External dependencies should be stored within a caching proxy or similar mirroring system.

- Curatorship is more expensive than development

Programmers may be in short supply, but programmers have consistently shown greater willingness to volunteer their programming output versus their skills in reviewing and auditing other’s output. While there are groups that have a tradition of deep review (e.g. netlib, openbsd), the user groups struggled to maintain a similar review system past a small set of ‘standard’ libraries. Instead, it seems less expensive to let the consumers try to determine fitness and quality themselves and attract customers with the quantity of available software.

Acknowledgements

I’d like to thank the Charles Babbage Institute for their assistance with the research.

References

(Balstad 1975): John Bolstad. 1975. A proposed classification for computer program library subroutines. SIGUCCS Newsl. 5, 2 (May 1975), 25–37. https://doi.org/10.1145/1098881.1098882

(Glass 1981): Robert C. Glass. 1981. Software Parts Nostalgia. Datamation, Vol 27, (Nov. 1981), 245-247.

(Goldstine 1947): Herman Goldstine and John Von Neumann. Planning and coding of problems for an electronic computing instrument, Vol. 1. Inst. for Advanced Study, Princeton, N. J, Apml 1, 1947 (69 pp.). (Reprinted in von Neumann’s Collected Works, Vol 5, A H Taub, Ed., Pergamon, London, 1963, pp. 80-151.)

(Greenwald 1956): I. D. Greenwald and H. G. Martin. 1956. Conclusions After Using the PACT I Advanced Coding Technique. J. ACM 3, 4 (Oct. 1956), 309–313. https://doi.org/10.1145/320843.320850

(Melahn 1956): Wesley S. Melahn. 1956. A Description of a Cooperative Venture in the Production of an Automatic Coding System. J. ACM 3, 4 (Oct. 1956), 266–271. https://doi.org/10.1145/320843.320844

(SHARE 1956): John Greenstadt, Editor-in-Chief. SHARE Reference Manual for the IBM 704. 1956. https://www.piercefuller.com/scan/share59.pdf

(SWAP 1968): Users Organization for Control Data Computer Systems, Handbook, Part III: Program Catalog, (June 1968). https://archives.lib.umn.edu/repositories/3/archival_objects/1616775

(USE 1956): Minutes of Meeting of USE, Seattle WA. (9-10 January 1956). https://archives.lib.umn.edu/repositories/3/resources/19

(USE-b 1956): Minutes of Meeting of USE, St. Paul MN. (15-16 February 1956). https://archives.lib.umn.edu/repositories/3/resources/19

(USE-c 1956): Minutes of Meeting of USE, Van Nerys CA. (29-30 March 1956). https://archives.lib.umn.edu/repositories/3/resources/19

(USTB 1958): USTB-5 Revised Univac Scientific Routines Index. (January 31 1958). https://archives.lib.umn.edu/repositories/3/resources/19

(Wheeler 1951): David John Wheeler, et al. The Preparation of Programs for an Electronic Digital Computer: With Special Reference to the Edsac and the Use of a Library of Subroutines. Reprint of the ed. 1951 ed. Addison-Wesley 1951.

Appendix

DECUS ‘About Viruses’ Policy (1990)

The DECUS Library is a clearinghouse only. As such, it cannot checks it software for proper function, quality of implementation, covert behavior, or other similar issues. However, it will not knowingly release software which is known to be harmful to its users.

While we cannot provide absolute assurance against covert behavior, we believe it is rendered less probable because:

- Submitters' names remain permanently associated with their submissions; anyone contributing a virus or trojan horse risks his or her reputation.

- A large user community is likely to have someone notice covert behavior. Since this can be reported quickly to a central location, there is safety in numbers.

- Submitting sources is encouraged, so that covert behavior is harder to hide.

If covert behavior is reported in software submitted to the DECUS library, the library will take the following actions:

- Suspend distribution of that software.

- Investigate the report and if it is found true, remove the offending software from the library.

- Report the complete incident to all known sites to whom the software was distributed, with all available information, including where the software came from.

- Attempts to remove the objectionable behavior may be made by volunteers, to reclaim what value may exist in the software. Any such reclaimed software will appear only with a full explanation of what was done.